It’s no secret that Kubernetes doesn’t come with many security features enabled by default. PodSecurityPolicy (PSP) was one of the bigger security components. It was not enabled by default and was clunky to use, but when used correctly it played a big part in ensuring that your pods adhered to a specific security standard. Since PSP was deprecated (in Kubernetes 1.21), many businesses that run Kubernetes have raced to find a suitable replacement. A few security vendors have stood out, and some are stronger than others. Today we’ll take a look at Kyverno, a third-party component and self-proclaimed PSP replacement.

As mentioned, PSP gives you, as an admin, a way to guarantee that all your pods meet a specific security standard. You create rules, and if a requested pod specification (for example) doesn’t pass all of them, it simply won’t be allowed into the cluster.

This is essentially the job of an admission controller in Kubernetes. There are a number of these that can be enabled, each performing a slightly different task.

Admission Controllers - What are they?

You can think of admission controllers as the cluster police. Some are built into Kubernetes and some come from third parties. Admission control comes in two flavours, mutating and validating, and third-party controllers plug into each through mutating and validating admission webhooks. They “intercept” requests and either check them against certain rules (validate), rejecting them if they don’t comply, or edit them (mutate) so that they conform to the cluster rules. Kyverno can act as both a mutating and a validating admission controller.

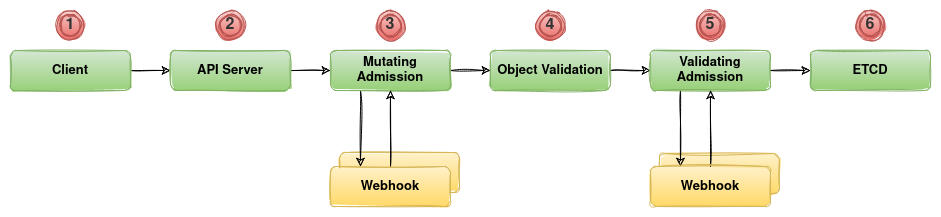

Let’s take a look at the object creation flow in a Kubernetes cluster, from the moment the user hits Enter on their ‘kubectl create deployment’ command to the moment the pods in that deployment are created.

Step 1: Client

Step 1 is straightforward: the client (e.g. kubectl) sends a request to create a deployment to the kube-apiserver.

Step 2: API Server

The API server receives the request and performs authentication and authorisation: for example, is the user making this request allowed to create this object (i.e. a deployment) in this namespace? If authentication and authorisation pass, the kube-apiserver sends the request on to the admission controllers.

Step 3: Mutating Admission

The mutating admission controller receives the request, checks it, and adds any missing fields or adjusts specified values based on its set of rules and logic. The most common example of mutating admission is adding required labels to a resource. This could be for charge-back, for example, where the pod should carry a label with your cost centre, or simply to enforce consistent label formatting.

In our case, Kyverno will perform these mutating actions.

Once done, the mutated object spec is passed on to the next step.

Step 4: Object Validation

The mutated spec goes through a set of standard schema validation checks to ensure that it still makes sense. If all is well, it is passed on to the validating admission webhooks.

Step 5: Validating Admission

This step is where the spec is tested against a standard, or custom rules, set out by the validating admission controller. Kyverno can once again perform these checks for us. If the spec passes all rules, the object is finally allowed into the cluster, and we move on to step 6.

Step 6: ETCD

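The object specification is finally written to the ETCD database. For a deployment this is not quite the end: the Deployment controller then creates a ReplicaSet, which in turn creates the pods, and each of those objects goes through the same admission flow before it is stored and the pods are scheduled and started.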
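To make the ordering of these steps concrete, here is a minimal Python sketch of the flow under a toy model: an object is a plain dict, mutating and validating rules are ordinary functions, and a list stands in for ETCD. The names `admit`, `add_cost_center` and `require_cost_center` are hypothetical; real admission happens inside the kube-apiserver, which calls out to webhooks over HTTPS.

```python
import copy
from typing import Callable, List, Optional

# Toy model: a Kubernetes object is a plain dict.
Mutator = Callable[[dict], dict]
Validator = Callable[[dict], Optional[str]]  # returns an error message, or None if OK


class AdmissionDenied(Exception):
    """Raised when a request is rejected during admission."""


def admit(obj: dict, mutators: List[Mutator], validators: List[Validator],
          store: List[dict]) -> dict:
    # Step 3: mutating admission; each mutator sees the previous one's output.
    for mutate in mutators:
        obj = mutate(copy.deepcopy(obj))
    # Step 4: object validation (reduced here to a minimal shape check).
    if "kind" not in obj or "metadata" not in obj:
        raise AdmissionDenied("object failed schema validation")
    # Step 5: validating admission; a single denial rejects the whole request.
    for validate in validators:
        error = validate(obj)
        if error is not None:
            raise AdmissionDenied(error)
    # Step 6: persist the object (a list stands in for ETCD).
    store.append(obj)
    return obj


def add_cost_center(obj: dict) -> dict:
    """Hypothetical mutating rule: add a CostCenter label."""
    obj.setdefault("metadata", {}).setdefault("labels", {})["CostCenter"] = "dev-5632"
    return obj


def require_cost_center(obj: dict) -> Optional[str]:
    """Hypothetical validating rule: insist on a CostCenter label."""
    if "CostCenter" not in obj["metadata"].get("labels", {}):
        return "a CostCenter label is required"
    return None


etcd: List[dict] = []
stored = admit({"kind": "Pod", "metadata": {"name": "demo"}},
               [add_cost_center], [require_cost_center], etcd)
print(stored["metadata"]["labels"])  # {'CostCenter': 'dev-5632'}
```

Because mutation runs before validation, the validator passes even though the submitted object had no labels; submit the same object without the mutator and it is rejected before it ever reaches the store.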

Enough talk, let’s get started

I’ve got a K8s cluster up and running, which I’ll use to dive a little deeper into a few use cases. First, let’s look at the kube-apiserver configuration and double-check that the admission webhooks are enabled. MutatingAdmissionWebhook and ValidatingAdmissionWebhook are enabled by default, but just out of interest, the output below (my kube-apiserver runs as a container on the control-plane nodes) shows the enabled admission plugins, including both of them.

```
$ sudo docker inspect kube-apiserver | grep admission
"--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction,Priority,TaintNodesByCondition,PersistentVolumeClaimResize,AlwaysPullImages",
```

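If you want to check the webhook plugins programmatically rather than by eye, here is a minimal Python sketch that classifies them from a list of kube-apiserver arguments. It assumes the arguments look like the docker inspect output (JSON-quoted, with a trailing comma) and treats a plugin named in --disable-admission-plugins as off. Because --enable-admission-plugins adds to the default set rather than replacing it, a plugin missing from that flag is reported as "default" rather than disabled. The helper names are hypothetical.

```python
from typing import Dict, Iterable, List, Set

WEBHOOK_PLUGINS = ("MutatingAdmissionWebhook", "ValidatingAdmissionWebhook")


def plugins_for_flag(args: List[str], flag: str) -> Set[str]:
    """Collect plugin names from every '<flag>=a,b,c' argument."""
    prefix = flag + "="
    found: Set[str] = set()
    for arg in args:
        # docker inspect prints each argument JSON-quoted with a trailing comma.
        arg = arg.strip().rstrip(",").strip('"')
        if arg.startswith(prefix):
            found.update(name for name in arg[len(prefix):].split(",") if name)
    return found


def webhook_plugin_status(args: Iterable[str]) -> Dict[str, str]:
    arg_list = list(args)  # iterated twice below
    enabled = plugins_for_flag(arg_list, "--enable-admission-plugins")
    disabled = plugins_for_flag(arg_list, "--disable-admission-plugins")
    status = {}
    for name in WEBHOOK_PLUGINS:
        if name in disabled:
            status[name] = "disabled"
        elif name in enabled:
            status[name] = "enabled"
        else:
            status[name] = "default"  # not listed: falls back to the default set
    return status


# An abbreviated version of the argument shown in the docker inspect output.
line = ('"--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,'
        'MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota",')
print(webhook_plugin_status([line]))
```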
Before Installing Kyverno

To install Kyverno on our cluster, we have two options: Helm or kubectl. I’ll be following the kubectl method. The Kyverno documentation suggests installing with a kubectl command that points to a YAML file on GitHub:

```
$ kubectl create -f https://raw.githubusercontent.com/kyverno/kyverno/main/config/install.yaml
```

NOTE: It’s not advisable to install applications on Kubernetes from random sources on the internet. It is more secure to thoroughly inspect the objects that will be created, look at the images used, and run vulnerability scans before deploying. Using Trivy, we can quickly scan the images before deploying them to our cluster (ideally, scan the exact tags referenced in the manifest, since ‘latest’ may not be what actually gets deployed).

```
$ trivy i ghcr.io/kyverno/kyverno:latest
2022-03-04T12:09:56.993+0200    INFO    Number of language-specific files: 1
2022-03-04T12:09:56.993+0200    INFO    Detecting gobinary vulnerabilities...

kyverno (gobinary)
==================
Total: 0 (UNKNOWN: 0, LOW: 0, MEDIUM: 0, HIGH: 0, CRITICAL: 0)

$ trivy i ghcr.io/kyverno/kyvernopre:latest
2022-03-04T12:09:58.873+0200    INFO    Number of language-specific files: 1
2022-03-04T12:09:58.873+0200    INFO    Detecting gobinary vulnerabilities...

kyvernopre (gobinary)
=====================
Total: 0 (UNKNOWN: 0, LOW: 0, MEDIUM: 0, HIGH: 0, CRITICAL: 0)
```

We’re good to go!

Installing Kyverno

Once you’re happy with, and trust, the images and Custom Resource Definitions (CRDs) that you’re installing on your cluster, you can go ahead and install Kyverno:

```
$ kubectl create -f https://raw.githubusercontent.com/kyverno/kyverno/main/config/install.yaml
```

Easy enough. Let’s confirm everything is installed.

```
$ kubectl api-resources | grep admission
mutatingwebhookconfigurations     admissionregistration.k8s.io   false   MutatingWebhookConfiguration
validatingwebhookconfigurations   admissionregistration.k8s.io   false   ValidatingWebhookConfiguration

$ kubectl get mutatingwebhookconfigurations
NAME                                    WEBHOOKS   AGE
kyverno-policy-mutating-webhook-cfg     1          21h
kyverno-resource-mutating-webhook-cfg   2          21h
kyverno-verify-mutating-webhook-cfg     1          21h
rancher-monitoring-admission            1          140d

$ kubectl get validatingwebhookconfigurations
NAME                                      WEBHOOKS   AGE
ingress-nginx-admission                   1          142d
kyverno-policy-validating-webhook-cfg     1          26s
kyverno-resource-validating-webhook-cfg   2          25s
rancher-monitoring-admission              1          139d

$ kubectl get pod -n kyverno
NAME                       READY   STATUS    RESTARTS   AGE
kyverno-69f5b57499-6nmqs   1/1     Running   0          21h
```

Looks good!

Getting our hands dirty

Kyverno’s website and GitHub repository are excellent libraries of ready-to-use policies, and we can easily adapt these policies to suit our needs. For PSP replacements specifically, their GitHub repository has a dedicated set of policies. To show how Kyverno can standardise your workloads and strengthen your security posture, we’ll look at a few policies, starting with validation.

Validating Webhook Examples:

- Block Images with Latest Tag

- ReadOnly HostPath

- Workload Capability Check

Block Images with Latest Tag

Ideally, we don’t want the container images we deploy to production to use a mutable tag like ‘latest’. We need to ensure that version 1.1 (for example), which has been tested in the dev environment, is exactly the same image that gets deployed to production. Keep in mind that Docker adds the ‘latest’ tag if you don’t provide one, so the following two commands produce the same image tag:

```
$ docker build -t how2cloud/company_website .
$ docker build -t how2cloud/company_website:latest .
```

You can see how this can easily create confusion. When a developer makes a change, builds the image and pushes it to your image registry, the image running in production under ‘latest’ is no longer the image that ‘latest’ points to. Tagging an image with a version number or a branch name is a better option to prevent confusion and wasted time troubleshooting a production issue.
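Working out which tag a reference actually resolves to is easy to get wrong when the registry host includes a port (for example registry.local:5000, a hypothetical registry), because the port also contains a colon. Here is a minimal Python sketch, assuming Docker’s convention that a reference without a tag means ‘latest’; `effective_tag` is a hypothetical helper, not part of any Docker API.

```python
def effective_tag(image: str) -> str:
    """Return the tag (or digest) an image reference resolves to.

    Assumes Docker's convention: a reference with no explicit tag means 'latest'.
    """
    if "@" in image:
        # Digest references (name@sha256:...) pin an exact image.
        return image.split("@", 1)[1]
    # A registry port (host:5000) sits before the last '/', so only the final
    # path component can carry the tag.
    last_component = image.rsplit("/", 1)[-1]
    if ":" in last_component:
        return last_component.split(":", 1)[1]
    return "latest"


for ref in ("how2cloud/company_website", "how2cloud/company_website:latest",
            "registry.local:5000/busybox", "registry.local:5000/busybox:1.28"):
    print(ref, "->", effective_tag(ref))
```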

Let’s use the following deployment as our test application. It’s a simple busybox image (with a tag of ‘latest’).

Deployment YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deploy
  namespace: how2cloud
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      containers:
      - args:
        - sleep
        - "10000"
        image: h2c-harbor.quix.co.za/kyverno-testing/busybox:latest
        name: busybox
        volumeMounts:
        - mountPath: /test-pd
          name: test-volume
          readOnly: false
      volumes:
      - name: test-volume
        hostPath:
          path: /var/tmp
          type: Directory
```

With the following policy, we can prevent pods with an image tag of ‘latest’ from being created. A few things to take note of in the policy below: validationFailureAction is set to enforce, meaning Kyverno’s validating webhook will block any pod request that does not conform to the rules (the alternative, audit, only reports violations). The kind is set to ClusterPolicy, which means the policy applies to the whole cluster.

Kyverno Cluster Policy - Disallow Latest Tag

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disallow-latest-tag
  annotations:
    policies.kyverno.io/title: Disallow Latest Tag
    policies.kyverno.io/category: Best Practices
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: Pod
    policies.kyverno.io/description: >-
      The ':latest' tag is mutable and can lead to unexpected errors if the
      image changes. A best practice is to use an immutable tag that maps to
      a specific version of an application Pod. This policy validates that the image
      specifies a tag and that it is not called `latest`.
spec:
  validationFailureAction: enforce
  background: true
  rules:
  - name: require-image-tag
    match:
      resources:
        kinds:
        - Pod
    validate:
      message: "An image tag is required."
      pattern:
        spec:
          containers:
          - image: "*:*"
  - name: validate-image-tag
    match:
      resources:
        kinds:
        - Pod
    validate:
      message: "Using a mutable image tag e.g. 'latest' is not allowed."
      pattern:
        spec:
          containers:
          - image: "!*:latest"
```

Source: Disallow Latest Tag
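To see what the two patterns actually test, here is a minimal Python sketch that approximates Kyverno’s string wildcards with fnmatch: ‘*’ matches any run of characters and a leading ‘!’ negates the match. This is an approximation for illustration, not Kyverno’s implementation, and `image_violations` is a hypothetical helper.

```python
from fnmatch import fnmatchcase
from typing import List


def matches(value: str, pattern: str) -> bool:
    """Approximate a Kyverno string pattern: '*' wildcards plus '!' negation."""
    if pattern.startswith("!"):
        return not fnmatchcase(value, pattern[1:])
    return fnmatchcase(value, pattern)


def image_violations(images: List[str]) -> List[str]:
    """Apply both rules of disallow-latest-tag to a list of container images."""
    errors = []
    for image in images:
        if not matches(image, "*:*"):
            errors.append(f"{image}: An image tag is required.")
        if not matches(image, "!*:latest"):
            errors.append(f"{image}: Using a mutable image tag e.g. 'latest' is not allowed.")
    return errors


print(image_violations(["h2c-harbor.quix.co.za/kyverno-testing/busybox:latest"]))
print(image_violations(["busybox"]))
```

One thing this approximation makes visible: "registry.local:5000/busybox" (a hypothetical registry with a port) passes the "*:*" check even though it has no tag, because the port supplies the colon. If Kyverno’s wildcards behave the same way, registries with ports would need a stricter check.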

Let’s see what happens when we try to create our deployment.

```
$ kubectl apply -f busybox_latesttag.yml
deployment.apps/test-deploy created

$ kubectl get all -n how2cloud
NAME                          READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/test-deploy   0/1     0            0           10s

NAME                                     DESIRED   CURRENT   READY   AGE
replicaset.apps/test-deploy-76f455b7cc   1         0         0       10s
```

We can see that our deployment was accepted, but our pods aren’t being created: the ReplicaSet has a desired count of 1 and a current count of 0. Let’s take a closer look at the ReplicaSet:

```
$ kubectl describe rs -l app=test-deploy -n how2cloud
...
Events:
  Type     Reason        Age  From                   Message
  ----     ------        ---- ----                   -------
  Warning  FailedCreate  52s  replicaset-controller  Error creating: admission webhook "validate.kyverno.svc-fail" denied the request:

resource Pod/how2cloud/test-deploy-76f455b7cc-z5xqq was blocked due to the following policies

disallow-latest-tag:
  validate-image-tag: 'validation error: Using a mutable image tag e.g. ''latest''
    is not allowed. Rule validate-image-tag failed at path /spec/containers/0/image/'
  Warning  FailedCreate  52s  replicaset-controller  Error creating: admission webhook "validate.kyverno.svc-fail" denied the request:
```

So Kyverno is doing its job and blocking the pod from being created. We can improve on this policy and prevent the deployment from being created in the first place. That way we fail faster, instead of having a deployment that succeeds (from a CI/CD point of view) while its pods never start.

```
$ cat kyverno/kv.latesttag.yml
```

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disallow-latest-tag
  annotations:
    policies.kyverno.io/title: Disallow Latest Tag
    policies.kyverno.io/category: Best Practices
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: Pod
    policies.kyverno.io/description: >-
      The ':latest' tag is mutable and can lead to unexpected errors if the
      image changes. A best practice is to use an immutable tag that maps to
      a specific version of an application Pod. This policy validates that the image
      specifies a tag and that it is not called `latest`.
spec:
  validationFailureAction: enforce
  background: true
  rules:
  - name: require-image-tag
    match:
      resources:
        kinds:
        - Pod
        - Deployment
        - DaemonSet
    validate:
      message: "An image tag is required."
      pattern:
        spec:
          containers:
          - image: "*:*"
  - name: validate-image-tag
    match:
      resources:
        kinds:
        - Pod
        - Deployment
        - DaemonSet
    validate:
      message: "Using a mutable image tag e.g. 'latest' is not allowed."
      pattern:
        spec:
          containers:
          - image: "!*:latest"
```

With the updated policy, we’ve added Deployments and DaemonSets to the resource list. Let’s test it out…

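```
$ kubectl apply -f busybox_latesttag.yml
Error from server: error when creating "busybox_latesttag.yml": admission webhook "validate.kyverno.svc-fail" denied the request:

resource Deployment/how2cloud/test-deploy was blocked due to the following policies

disallow-latest-tag:
  require-image-tag: 'validation error: An image tag is required. Rule require-image-tag
    failed at path /spec/containers/'
  validate-image-tag: 'validation error: Using a mutable image tag e.g. ''latest''
    is not allowed. Rule validate-image-tag failed at path /spec/containers/'
```

The deployment is now rejected at creation time, but look closely at the output: both rules failed at /spec/containers/, including require-image-tag, even though the image does have a tag. In a Deployment or DaemonSet the containers live under spec.template.spec.containers, so a pattern written for a Pod’s spec.containers never matches, and this version of the policy will reject every Deployment and DaemonSet, even ones with a pinned tag. Kyverno can automatically generate controller versions of rules that match Pods (the autogen- rules in the later examples are exactly that), so relying on auto-generation, or writing separate rules that target spec.template.spec.containers, is the safer route.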
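Here is a minimal Python sketch of the path mapping involved in the /spec/containers/ failures above: a Pod keeps its containers under spec.containers, while Pod controllers embed a Pod template under spec.template.spec (and CronJobs one level deeper, under spec.jobTemplate). This is the kind of path rewrite Kyverno’s auto-generated rules take care of; `pod_spec` and `container_images` are hypothetical helpers, not Kyverno code.

```python
from typing import Any, Dict, List

# Controllers that embed a Pod template directly under spec.template.
TEMPLATE_KINDS = {"Deployment", "DaemonSet", "StatefulSet", "ReplicaSet", "Job"}


def pod_spec(obj: Dict[str, Any]) -> Dict[str, Any]:
    """Return the Pod spec embedded in a Pod or a Pod controller."""
    kind = obj.get("kind")
    spec = obj.get("spec", {})
    if kind == "Pod":
        return spec
    if kind in TEMPLATE_KINDS:
        return spec.get("template", {}).get("spec", {})
    if kind == "CronJob":
        return spec.get("jobTemplate", {}).get("spec", {}).get("template", {}).get("spec", {})
    raise ValueError(f"unsupported kind: {kind}")


def container_images(obj: Dict[str, Any]) -> List[str]:
    return [c["image"] for c in pod_spec(obj).get("containers", [])]


deployment = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {"name": "busybox", "image": "h2c-harbor.quix.co.za/kyverno-testing/busybox:latest"},
    ]}}},
}
print("containers" in deployment["spec"])  # False: a Pod-shaped pattern finds nothing here
print(container_images(deployment))
```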

ReadOnly HostPath

HostPath volumes are known to be quite insecure, but if you have to use them, mounting them read-only is the more secure option. We can use the following deployment YAML to test. Notice how we set spec.template.spec.containers[].volumeMounts[].readOnly to false. If omitted, readOnly also defaults to false.

Deployment YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deploy
  namespace: how2cloud
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      containers:
      - args:
        - sleep
        - "10000"
        image: h2c-harbor.quix.co.za/kyverno-testing/busybox:1.28
        name: busybox
        volumeMounts:
        - mountPath: /test-pd
          name: test-volume
          readOnly: false  # explicitly setting readOnly to false
      volumes:
      - name: test-volume
        hostPath:
          path: /var/tmp
          type: Directory
```

Kyverno Cluster Policy - ReadOnly HostPath

We can now write a Kyverno policy to block workloads that attempt to use writeable hostPath volumes. Some cluster-wide policies can be quite dangerous when deployed to a cluster without thorough testing. Some system pods, especially Fluentd and other log collectors, need writeable hostPath mounts. For these, we can add an exclude block.

For example (excluding the whole kube-system namespace):

```yaml
spec:
  rules:
  - name: match-pods-except-system
    match:
      any:
      - resources:
          kinds:
          - Pod
    exclude:
      any:
      - resources:
          namespaces:
          - kube-system
```
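In this snippet, a resource is checked by the rule only if it satisfies the match block and does not satisfy the exclude block. Here is a minimal Python sketch of that decision for this particular snippet, assuming a single kinds list and a single namespaces list; the `Rule` class is hypothetical and ignores Kyverno’s other selectors (labels, names, subjects and so on).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Rule:
    """Hypothetical model of a rule with a kinds match and a namespaces exclude."""
    match_kinds: List[str]
    exclude_namespaces: List[str] = field(default_factory=list)

    def applies_to(self, kind: str, namespace: str) -> bool:
        matched = kind in self.match_kinds
        excluded = namespace in self.exclude_namespaces
        return matched and not excluded


rule = Rule(match_kinds=["Pod"], exclude_namespaces=["kube-system"])
print(rule.applies_to("Pod", "how2cloud"))    # True
print(rule.applies_to("Pod", "kube-system"))  # False
```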

The complete policy from the Kyverno library looks like this (it has no exclude block, so add one if your cluster runs workloads like these):

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: ensure-readonly-hostpath
  annotations:
    policies.kyverno.io/title: Ensure Read Only hostPath
    policies.kyverno.io/category: Other
    policies.kyverno.io/severity: medium
    policies.kyverno.io/minversion: 1.5.0
    policies.kyverno.io/subject: Pod
    policies.kyverno.io/description: >-
      Pods which are allowed to mount hostPath volumes in read/write mode pose a security risk
      even if confined to a "safe" file system on the host and may escape those confines (see
      https://blog.aquasec.com/kubernetes-security-pod-escape-log-mounts). The only true way
      to ensure safety is to enforce that all Pods mounting hostPath volumes do so in read only
      mode. This policy checks all containers for any hostPath volumes and ensures they are
      explicitly mounted in readOnly mode.
spec:
  background: false
  validationFailureAction: enforce
  rules:
  - name: ensure-hostpaths-readonly
    match:
      resources:
        kinds:
        - Pod
    preconditions:
      all:
      - key: "{{ request.operation }}"
        operator: In
        value:
        - CREATE
        - UPDATE
    validate:
      message: All hostPath volumes must be mounted as readOnly.
      foreach:
      # Fetch all volumes in the Pod which are a hostPath. Store the names in an array. There could be multiple in a Pod so can't assume just one.
      - list: "request.object.spec.volumes[?hostPath][]"
        deny:
          conditions:
            # For every name found for a hostPath volume (stored as `{{element}}`), check all containers, initContainers, and ephemeralContainers which mount this volume and
            # total up the number of them. Compare that to the ones with that same name which explicitly specify that `readOnly: true`. If these two
            # counts aren't equal, deny the Pod because at least one is attempting to mount that hostPath in read/write mode. Note that the absence of
            # the `readOnly: true` field implies read/write access. Therefore, every hostPath volume must explicitly specify that it should be mounted
            # in readOnly mode, regardless of where that occurs in a Pod.
            any:
            - key: "{{ request.object.spec.[containers, initContainers, ephemeralContainers][].volumeMounts[?name == '{{element.name}}'][] | length(@) }}"
              operator: NotEquals
              value: "{{ request.object.spec.[containers, initContainers, ephemeralContainers][].volumeMounts[?name == '{{element.name}}' && readOnly] [] | length(@) }}"
```

Source: Ensure Read Only hostPath

Once the above is applied, we can test it by deploying our busybox pod with a writeable hostPath volume:

```
$ kubectl apply -f busybox_hostpath.yml
Error from server: error when creating "busybox_hostpath.yml": admission webhook "validate.kyverno.svc-fail" denied the request:

resource Deployment/how2cloud/test-deploy was blocked due to the following policies

ensure-readonly-hostpath:
  autogen-ensure-hostpaths-readonly: 'validation failure: All hostPath volumes must be mounted as readOnly.'
```

This gets us one step closer to running more secure workloads.
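The JMESPath expressions in the policy’s deny condition are dense, so here is a minimal Python sketch of the same counting logic, assuming the Pod spec is available as a plain dict: for each hostPath volume, count the mounts that reference it and the mounts that reference it with readOnly set, and flag the volume if the two counts differ. `writable_hostpath_volumes` is a hypothetical helper.

```python
from typing import Any, Dict, List

CONTAINER_FIELDS = ("containers", "initContainers", "ephemeralContainers")


def writable_hostpath_volumes(pod_spec: Dict[str, Any]) -> List[str]:
    """Return names of hostPath volumes that at least one container mounts read/write."""
    mounts = [
        mount
        for field in CONTAINER_FIELDS
        for container in pod_spec.get(field) or []
        for mount in container.get("volumeMounts") or []
    ]
    offenders = []
    for volume in pod_spec.get("volumes") or []:
        if "hostPath" not in volume:
            continue
        name = volume["name"]
        total = sum(1 for m in mounts if m["name"] == name)
        read_only = sum(1 for m in mounts if m["name"] == name and m.get("readOnly"))
        # Same test as the policy's NotEquals condition: a mount that omits
        # readOnly, or sets it to false, makes the two counts differ.
        if total != read_only:
            offenders.append(name)
    return offenders


spec = {
    "containers": [{
        "name": "busybox",
        "volumeMounts": [{"mountPath": "/test-pd", "name": "test-volume", "readOnly": False}],
    }],
    "volumes": [{"name": "test-volume", "hostPath": {"path": "/var/tmp", "type": "Directory"}}],
}
print(writable_hostpath_volumes(spec))  # ['test-volume']
```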

Workload Capability Check

Many, myself included, consider the default capabilities that Docker grants to a container too permissive, and the Docker documentation lists quite a number of them. Fewer capabilities, more security! With the following policy, we can restrict which capabilities may be added to any container, so that only NET_BIND_SERVICE and CHOWN are allowed. Note that this policy restricts what is added on top of the defaults; it does not drop the defaults themselves.

Deployment YAML

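Returning to our busybox deployment from earlier, we can add three capabilities in the container’s securityContext: CHOWN, NET_BIND_SERVICE and SYS_TIME. SYS_TIME is not on the allowed list, so the policy should reject this deployment.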

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deploy
  namespace: how2cloud
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deploy
  template:
    metadata:
      labels:
        app: test-deploy
    spec:
      containers:
      - args:
        - sleep
        - "10000"
        image: h2c-harbor.quix.co.za/kyverno-testing/busybox:latest
        name: busybox
        securityContext:
          capabilities:
            add: ["CHOWN", "NET_BIND_SERVICE", "SYS_TIME"]
```

Kyverno Cluster Policy - Workload Capability Check

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: psp-restrict-adding-capabilities
  annotations:
    policies.kyverno.io/title: Restrict Adding Capabilities
    policies.kyverno.io/category: PSP Migration
    policies.kyverno.io/severity: medium
    kyverno.io/kyverno-version: 1.6.0
    policies.kyverno.io/minversion: 1.6.0
    kyverno.io/kubernetes-version: "1.23"
    policies.kyverno.io/subject: Pod
    policies.kyverno.io/description: >-
      Adding capabilities is a way for containers in a Pod to request higher levels
      of ability than those with which they may be provisioned. Many capabilities
      allow system-level control and should be prevented. Pod Security Policies (PSP)
      allowed a list of "good" capabilities to be added. This policy checks
      ephemeralContainers, initContainers, and containers to ensure the only
      capabilities that can be added are either NET_BIND_SERVICE or CAP_CHOWN.
spec:
  validationFailureAction: enforce
  background: true
  rules:
  - name: allowed-capabilities
    match:
      any:
      - resources:
          kinds:
          - Pod
    preconditions:
      all:
      - key: "{{ request.operation }}"
        operator: NotEquals
        value: DELETE
    validate:
      message: >-
        Any capabilities added other than NET_BIND_SERVICE or CAP_CHOWN are disallowed.
      foreach:
      - list: request.object.spec.[ephemeralContainers, initContainers, containers][]
        deny:
          conditions:
            all:
            - key: "{{ element.securityContext.capabilities.add[] || '' }}"
              operator: AnyNotIn
              value:
              - NET_BIND_SERVICE
              - CHOWN
              - ''
```

Source: Restrict Adding Capabilities

```
$ kubectl replace -f busybox_caps.yml --force
deployment.apps "test-deploy" deleted
Error from server: admission webhook "validate.kyverno.svc-fail" denied the request:

resource Deployment/how2cloud/test-deploy was blocked due to the following policies

psp-restrict-adding-capabilities:
  autogen-allowed-capabilities: 'validation failure: Any capabilities added other
    than NET_BIND_SERVICE or CAP_CHOWN are disallowed.'
```
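The AnyNotIn operator denies the request if any added capability falls outside the allowed list, and the empty-string default (|| '') lets containers that add no capabilities through. Here is a minimal Python sketch of that check, assuming the Pod spec is a plain dict; `disallowed_capabilities` is a hypothetical helper.

```python
from typing import Any, Dict, List

ALLOWED_CAPABILITIES = {"NET_BIND_SERVICE", "CHOWN"}
CONTAINER_FIELDS = ("ephemeralContainers", "initContainers", "containers")


def disallowed_capabilities(pod_spec: Dict[str, Any]) -> List[str]:
    """List every added capability, across all container types, that is not allowed."""
    found = []
    for field in CONTAINER_FIELDS:
        for container in pod_spec.get(field) or []:
            security_context = container.get("securityContext") or {}
            capabilities = security_context.get("capabilities") or {}
            # No 'add' list corresponds to the policy's '' default: nothing to check.
            for cap in capabilities.get("add") or []:
                if cap not in ALLOWED_CAPABILITIES:
                    found.append(cap)
    return found


spec = {"containers": [{
    "name": "busybox",
    "securityContext": {"capabilities": {"add": ["CHOWN", "NET_BIND_SERVICE", "SYS_TIME"]}},
}]}
print(disallowed_capabilities(spec))  # ['SYS_TIME']
```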

Mutating Webhook Examples

- Add Labels to Workloads

- Disable AutomountServiceAccountToken on Service Accounts

Add Labels to our Workloads

Beyond security standards, we can also use mutation to add specific fields to our objects, either for charge-back or for consistency. The following mutating policy adds the label CostCenter=dev-5632 to all Pods, Secrets, ConfigMaps, and Services.

Kyverno Cluster Policy - Add Labels

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-labels
  annotations:
    policies.kyverno.io/title: Add Labels
    policies.kyverno.io/category: Sample
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: Label
    policies.kyverno.io/description: >-
      Labels are used as an important source of metadata describing objects in various ways
      or triggering other functionality. Labels are also a very basic concept and should be
      used throughout Kubernetes. This policy performs a simple mutation which adds a label
      `CostCenter=dev-5632` to Pods, Services, ConfigMaps, and Secrets.
spec:
  rules:
  - name: add-labels
    match:
      resources:
        kinds:
        - Pod
        - Service
        - ConfigMap
        - Secret
    mutate:
      patchStrategicMerge:
        metadata:
          labels:
            CostCenter: dev-5632
```

Source: Add Labels

When we deploy our favourite busybox deployment from earlier, we can see that the running pod now carries our CostCenter label.

```
$ kubectl get po -n how2cloud --show-labels
NAME                           READY   STATUS    RESTARTS   AGE     LABELS
test-deploy-7849d7bc55-sztr7   1/1     Running   0          6m24s   CostCenter=dev-5632,app=test-deploy,pod-template-hash=7849d7bc55
```
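For a map such as metadata.labels, a strategic merge patch merges keys rather than replacing the whole map: existing labels such as app=test-deploy survive, and CostCenter is added, or overwritten if it already exists. Here is a minimal Python sketch of that behaviour for the labels map only; `merge_labels` is a hypothetical helper, not Kyverno’s patching code.

```python
import copy
from typing import Any, Dict


def merge_labels(obj: Dict[str, Any], patch_labels: Dict[str, str]) -> Dict[str, Any]:
    """Return a copy of obj with patch_labels merged into metadata.labels."""
    result = copy.deepcopy(obj)
    labels = result.setdefault("metadata", {}).setdefault("labels", {})
    labels.update(patch_labels)  # keys in the patch win; other labels are untouched
    return result


pod = {"kind": "Pod", "metadata": {"labels": {"app": "test-deploy"}}}
print(merge_labels(pod, {"CostCenter": "dev-5632"})["metadata"]["labels"])
# {'app': 'test-deploy', 'CostCenter': 'dev-5632'}
```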

We can expand on this policy for a real-world example. In a multi-tenant cluster, different cost centres will most likely be deployed into different namespaces. To make this split, we add a namespaceSelector to the rule’s match block, and add a second rule with a different namespace and a different CostCenter label.

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: add-labels
  annotations:
    policies.kyverno.io/title: Add Labels
    policies.kyverno.io/category: Sample
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: Label
    policies.kyverno.io/description: >-
      Labels are used as an important source of metadata describing objects in various ways
      or triggering other functionality. Labels are also a very basic concept and should be
      used throughout Kubernetes. This policy adds a CostCenter label to Pods, Services,
      ConfigMaps, and Secrets, with the value chosen by namespace.
spec:
  rules:
  - name: add-labels-dev
    match:
      resources:
        kinds:
        - Pod
        - Service
        - ConfigMap
        - Secret
        namespaceSelector:
          matchExpressions:
          - key: kubernetes.io/metadata.name
            operator: In
            values:
            - "how2cloud-dev"
    mutate:
      patchStrategicMerge:
        metadata:
          labels:
            CostCenter: dev-5632
  - name: add-labels-qa
    match:
      resources:
        kinds:
        - Pod
        - Service
        - ConfigMap
        - Secret
        namespaceSelector:
          matchExpressions:
          - key: kubernetes.io/metadata.name
            operator: In
            values:
            - "how2cloud-qa"
    mutate:
      patchStrategicMerge:
        metadata:
          labels:
            CostCenter: qa-5635
```

Once we’ve deployed our two pods in the different namespaces, we can see them tagged with the separate CostCenter labels.

```
$ kubectl get po -n how2cloud-qa --show-labels
NAME                           READY   STATUS    RESTARTS   AGE     LABELS
test-deploy-7849d7bc55-sztr7   1/1     Running   0          6m24s   CostCenter=qa-5635,app=test-deploy,pod-template-hash=7849d7bc55

$ kubectl get po -n how2cloud-dev --show-labels
NAME                           READY   STATUS    RESTARTS   AGE     LABELS
test-deploy-7849d7bc55-j7xf4   1/1     Running   0          6m37s   CostCenter=dev-5632,app=test-deploy,pod-template-hash=7849d7bc55
```
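Each rule’s namespaceSelector is evaluated against the labels of the namespace the resource lives in, and recent Kubernetes versions set kubernetes.io/metadata.name on every namespace automatically. Here is a minimal Python sketch of the matchExpressions operators and of how the two rules pick a CostCenter value; `expression_matches` and `cost_center_for` are hypothetical helpers.

```python
from typing import Dict, List, Optional

# One entry per rule in the policy: namespace name -> CostCenter value.
RULES = {"how2cloud-dev": "dev-5632", "how2cloud-qa": "qa-5635"}
NAME_LABEL = "kubernetes.io/metadata.name"


def expression_matches(labels: Dict[str, str], key: str, operator: str,
                       values: List[str]) -> bool:
    """Evaluate one Kubernetes label-selector matchExpression."""
    if operator == "In":
        return key in labels and labels[key] in values
    if operator == "NotIn":
        return key not in labels or labels[key] not in values
    if operator == "Exists":
        return key in labels
    if operator == "DoesNotExist":
        return key not in labels
    raise ValueError(f"unknown operator: {operator}")


def cost_center_for(namespace_labels: Dict[str, str]) -> Optional[str]:
    """Return the CostCenter a matching rule would add, or None if no rule matches."""
    for namespace, cost_center in RULES.items():
        if expression_matches(namespace_labels, NAME_LABEL, "In", [namespace]):
            return cost_center
    return None


print(cost_center_for({NAME_LABEL: "how2cloud-qa"}))  # qa-5635
print(cost_center_for({NAME_LABEL: "default"}))       # None
```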

Disable AutomountServiceAccountToken

Kubernetes creates a service account called default in every namespace. Pods that don’t specify their own service account are assigned this one, and its token is mounted inside them. The pod can use this token to access the Kubernetes API. Although the access granted to this default token is limited, it is considered best practice to provide access only where required. Token automounting can be disabled either at the pod level, by setting automountServiceAccountToken to false in the pod spec, or at the service account level; if both are set, the pod-level setting takes precedence. The policy below works at the service account level.
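The precedence rule matters for a service-account-level policy: a pod that explicitly sets automountServiceAccountToken: true still gets a token even if its service account says false. Here is a minimal Python sketch of how the effective setting is decided, assuming None means "not set"; `token_automounted` is a hypothetical helper.

```python
from typing import Optional


def token_automounted(pod_setting: Optional[bool], sa_setting: Optional[bool]) -> bool:
    """Decide whether the service account token is mounted into a pod."""
    if pod_setting is not None:
        return pod_setting  # the pod spec takes precedence
    if sa_setting is not None:
        return sa_setting   # otherwise the service account's setting applies
    return True             # neither set: the token is mounted


print(token_automounted(None, False))  # False: the mutated default service account wins
print(token_automounted(True, False))  # True: the pod overrides it
```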

Kyverno Cluster Policy - Disable AutomountServiceAccountToken

```yaml
apiVersion: kyverno.io/v1
kind: ClusterPolicy
metadata:
  name: disable-automountserviceaccounttoken
  annotations:
    policies.kyverno.io/title: Disable automountServiceAccountToken
    policies.kyverno.io/category: Other
    policies.kyverno.io/severity: medium
    policies.kyverno.io/subject: ServiceAccount
    kyverno.io/kyverno-version: 1.5.1
    kyverno.io/kubernetes-version: "1.21"
    policies.kyverno.io/description: >-
      A new ServiceAccount called `default` is created whenever a new Namespace is created.
      Pods spawned in that Namespace, unless otherwise set, will be assigned this ServiceAccount.
      This policy mutates any new `default` ServiceAccounts to disable auto-mounting of the token
      into Pods obviating the need to do so individually.
spec:
  rules:
  - name: disable-automountserviceaccounttoken
    match:
      resources:
        kinds:
        - ServiceAccount
        names:
        - default
    mutate:
      patchStrategicMerge:
        automountServiceAccountToken: false
```

Source: Disable automountServiceAccountToken

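Before applying the policy, we can inspect the default service account in our how2cloud namespace. We then apply the policy, recreate the namespace so that a new default service account is created, and inspect it again.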

```
$ kubectl get sa -n how2cloud -o yaml
apiVersion: v1
items:
- apiVersion: v1
  kind: ServiceAccount
  metadata:
    creationTimestamp: "2021-12-22T10:11:39Z"
    name: default
    namespace: how2cloud
    resourceVersion: "468966153"
    uid: x91210xx-23x9-47x2-x9x7-605285x8xx18
  secrets:
  - name: default-token-5tjxc
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

$ kubectl apply -f kyverno/kv.serviceAccount.yml
clusterpolicy.kyverno.io/disable-automountserviceaccounttoken created

$ kubectl get ns how2cloud -o yaml | kubectl replace --force -f -
namespace "how2cloud" deleted
namespace/how2cloud replaced

$ kubectl get sa -n how2cloud -o yaml
apiVersion: v1
automountServiceAccountToken: false   # <<---
kind: ServiceAccount
metadata:
  annotations:
    policies.kyverno.io/last-applied-patches: |
      disable-automountserviceaccounttoken.disable-automountserviceaccounttoken.kyverno.io: added
      /automountServiceAccountToken
  creationTimestamp: "2022-03-16T09:17:09Z"
  name: default
  namespace: how2cloud
  resourceVersion: "493682736"
  uid: 1ca6e195-c22b-4f9d-a7fe-89d0d8faa704
secrets:
- name: default-token-lwj6t
```

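After recreating the namespace, we can see in the output above that the new default service account has automountServiceAccountToken set to false. Be careful with this approach outside a test cluster: force-replacing a namespace deletes it along with everything inside it.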

Conclusion

We have only covered mutating and validating policies in this post. Kyverno has a whole host of additional features that can help us comply with our standards. For example, for additional network security, a Kyverno generate rule can create a deny-all network policy whenever a new namespace is created, so users will need to write their own policies to allow only the necessary traffic.

A generate rule with synchronisation enabled can also eliminate the need to recreate secrets manually in different namespaces. This is ideal when the same registry credentials are used across all the namespaces in the cluster, or for a TLS certificate used by ingresses. Kyverno can watch a ‘parent’ secret and, if it changes, copy the change to all namespaces. The same applies to any new namespaces that are created.

Kyverno helps us enforce best practice throughout our cluster and prevents insecure workloads from running and inadvertently putting other workloads at risk. Kyverno can also be used to set a governance baseline for our workloads in a distributed system like Kubernetes.

It’s important to keep in mind that mutating and validating webhooks are only a small part of securing the whole Kubernetes ecosystem. Securing the cluster nodes themselves, ETCD and the API, and ensuring that good coding practices are followed, are all items on the “Run a Secure Cluster” checklist.