When it comes to securing your Kubernetes clusters, and the applications that run within them, there aren’t a lot of security features enabled ‘out-of-the-box’.

This isn’t necessarily a bad thing.

This ensures that the amount of work required to get your first application up and running remains minimal. But when we’re past the testing and development phase, we (of course) get to production. And that’s where the real fun starts.

There are a few options available to add layers of security to your cluster. Today we’ll take a closer look at Pod Security Policies (PSP). PSP ships with Kubernetes, is disabled by default, and is currently in beta.

Open Policy Agent (OPA) is a third-party solution that is gaining popularity, and it could potentially be a replacement option in case pod security policies don’t make it to GA. We’ll take a closer look at OPA in a future post.

A brief description of Kubernetes Pod Security Policies

From the official Kubernetes docs:

A Pod Security Policy (PSP) is a cluster-level resource that controls security sensitive aspects of the pod specification. The PodSecurityPolicy objects define a set of conditions that a pod must run with in order to be accepted into the system, as well as defaults for the related fields.

So, what does that mean?

Well, Pod Security Policies ensure that every pod in your cluster runs within specific security boundaries. Because PSP is a cluster-wide feature, it can’t be enabled only for specific namespaces. Once it is enabled, every new pod (and every existing pod when it is recreated) is evaluated against these security boundaries, and only pods that pass the security checks are allowed to run.

But what kind of boundaries are we talking about? I hear you ask… With a restrictive policy (more on this later), containers aren’t allowed any added capabilities, pods may only use specific volume types, and pods aren’t allowed to use the host’s Linux namespaces (i.e. the host’s network, PID or IPC namespace). As an example, we can allow our pods to mount secrets, configMaps, and persistentVolumeClaims, but not hostPaths.

Somewhere during the journey of grasping container concepts, we’ve all probably wondered: “Is the root user inside the container the same as root on the host?” and “If someone gained access to a container, could they somehow elevate their privileges and access files on the host?” In short, yes… and that is quite scary. This, and more, is exactly what we’re trying to prevent with pod security policies.

The problem

This is what nightmares are made of. An extreme example, yes, but it does showcase what is possible. Let’s quickly demonstrate this on our LAB cluster. I doubt I have to say it, but for the record… THIS IS NOT FOR PRODUCTION USE.

As a user with kubectl access, create the script (from the above link), make it executable, and run it. This drops you into a new prompt, where executing ‘whoami’ returns ‘root’. But surely that just means we are the root user inside the container? So…? Looking at the script, what it actually does is mount the host’s filesystem and execute bash. Wait! Ok, does this mean what I think it means? Yip…

From inside the container, we’re able to create files on the host’s root filesystem.

```
# inside the container
[root@sudo--test-cluster /]# touch /CreatingAFileOnRoot
[root@sudo--test-cluster /]# exit
pod "blackspy-sudo" deleted

# on the host
[root@test-cluster /]# ls -l /
total 28
[...]
-rw-r--r--.  1 root root    0 Dec 20 10:07 CreatingAFileOnRoot   <-- Here it is
drwxr-xr-x. 19 root root 3760 Dec 20 10:15 dev
drwxr-xr-x. 80 root root 8192 Dec 20 10:11 etc
drwxr-xr-x.  4 root root   28 Dec 20 09:24 home
[...]
```
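Before switching anything on, it can be worth checking whether pods in your cluster already mount host paths. Below is a minimal bash sketch, assuming kubectl is configured with permission to list pods in all namespaces; the function name list_hostpath_pods is made up for this example. It uses kubectl’s JSONPath output and prints only the pods that declare at least one hostPath volume, along with the host paths.

```bash
#!/usr/bin/env bash
# Sketch: list pods that declare at least one hostPath volume.
# Assumes kubectl can list pods in all namespaces.
# list_hostpath_pods is a hypothetical helper name.
list_hostpath_pods() {
  # kubectl prints one line per pod: "<namespace>/<name><TAB><host paths>";
  # awk then keeps only pods whose host-path column is non-empty.
  kubectl get pods --all-namespaces \
    -o jsonpath='{range .items[*]}{.metadata.namespace}/{.metadata.name}{"\t"}{.spec.volumes[*].hostPath.path}{"\n"}{end}' |
    awk -F'\t' '$2 != "" { print $1 "\t" $2 }'
}

# Usage (on a machine with cluster access):
# list_hostpath_pods
```

An empty result only means that no current pod declares a hostPath volume; it says nothing about privileged containers or host namespaces.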

The Fix

We already know that PSPs will be able to help fix this rather critical problem, so let’s get started.

Check if PSPs are enabled for your kubeadm cluster

An easy way to check whether PSPs are enabled is to look at the kube-apiserver’s configuration. On a kubeadm cluster, we can simply grep for it in the static pod manifest. As in the example below, you will see a comma-separated list of enabled admission plugins; with ‘PodSecurityPolicy’ absent from the list, we know PSPs are not enabled for this cluster.

```
# grep admission /etc/kubernetes/manifests/kube-apiserver.yaml
- --enable-admission-plugins=NodeRestriction
```
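If you’d rather script this check, here is a minimal bash sketch. The function name psp_admission_enabled is hypothetical, and it assumes the flag appears as --enable-admission-plugins=a,b,c on a single line, as in the kubeadm manifest above. It splits the list on commas and looks for an exact ‘PodSecurityPolicy’ entry.

```bash
#!/usr/bin/env bash
# Sketch: does --enable-admission-plugins include PodSecurityPolicy?
# Defaults to the kubeadm manifest path; pass another file to check it instead.
# psp_admission_enabled is a hypothetical helper name.
psp_admission_enabled() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  local plugins
  # Grab the first occurrence of the flag, stopping at whitespace or a double
  # quote, then keep only the comma-separated value after '='.
  plugins=$(grep -o -- '--enable-admission-plugins=[^[:space:]"]*' "$manifest" | head -n 1 | cut -d= -f2)
  # One plugin per line; require an exact, whole-line match.
  if tr ',' '\n' <<<"$plugins" | grep -qx 'PodSecurityPolicy'; then
    echo "enabled"
  else
    echo "disabled"
  fi
}

# Usage (on a kubeadm control-plane node):
# psp_admission_enabled
```

Because the match stops at a double quote, the same function should also work on a file containing saved docker inspect output, such as the Rancher check below.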

Check if PSPs are enabled for your Rancher-managed K8s cluster

On the control-plane nodes, check whether ‘PodSecurityPolicy’ appears in the list of plugins printed by the command below:

```
$ docker inspect $(docker container ls | grep kube-apiserver | awk '{print $1}') | grep "enable-admission-plugins"
"--enable-admission-plugins=NamespaceLifecycle,LimitRanger,ServiceAccount,DefaultStorageClass,DefaultTolerationSeconds,MutatingAdmissionWebhook,ValidatingAdmissionWebhook,ResourceQuota,NodeRestriction,Priority,TaintNodesByCondition,PersistentVolumeClaimResize,PodSecurityPolicy"
```

Enable PSPs

kubeadm - For our kubeadm cluster, we can simply add ‘PodSecurityPolicy’ to the comma-separated list and save the file. Because it’s a static pod manifest, the kube-apiserver static pod is restarted as soon as you save and exit, and PSPs are enabled. You might not be able to get the status of your pods right away, because the API server is busy restarting. Keep in mind that static pods are node-specific, so perform this step on every control-plane node.

```
# grep admission /etc/kubernetes/manifests/kube-apiserver.yaml
- --enable-admission-plugins=NodeRestriction,PodSecurityPolicy

# while true; do kubectl get pod -n kube-system; sleep 5; done
The connection to the server 10.10.7.10:6443 was refused - did you specify the right host or port?
NAME                                      READY   STATUS              RESTARTS   AGE
calico-kube-controllers-c9784d67d-4gnlz   1/1     Running             4          95d
calico-node-v6cbw                         1/1     Running             4          95d
coredns-f9fd979d6-gmrms                   1/1     Running             4          95d
coredns-f9fd979d6-xwnmv                   1/1     Running             4          95d
etcd-test-cluster                         1/1     Running             4          95d
kube-apiserver-test-cluster               0/1     ContainerCreating   0          95d
kube-controller-manager-test-cluster      1/1     Running             5          95d
kube-proxy-knr2v                          1/1     Running             4          95d
kube-scheduler-test-cluster               0/1     Running             5          95d

# docker container ls | grep kube-api
02ec67ffcf0e ce0df89806bb "kube-apiserver --ad…" 3 seconds ago Up 2 seconds k8s_kube-apiserver_kube-apiserver-test-cluster_kube-system_e130d9af7a94d4a3fa4400b359d3a943_0
b919e9689f1c k8s.gcr.io/pause:3.2 "/pause" 5 seconds ago Up 4 seconds k8s_POD_kube-apiserver-test-cluster_kube-system_e130d9af7a94d4a3fa4400b359d3a943_0
```
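If you have several control-plane nodes, the manifest edit above can be scripted. A minimal sketch, assuming GNU sed (for sed -i) and the single-line flag format shown above; the function name enable_psp_plugin is hypothetical. It does nothing if the plugin is already listed, and otherwise copies the manifest to a backup directory you choose before appending ,PodSecurityPolicy. Keep that backup directory outside /etc/kubernetes/manifests: the kubelet reads the files in that directory as static pod manifests, so a stray copy there can cause trouble.

```bash
#!/usr/bin/env bash
# Sketch: append PodSecurityPolicy to --enable-admission-plugins in a
# kube-apiserver static pod manifest, unless it is already listed.
# Assumes GNU sed and the flag on a single line ("- --enable-admission-plugins=a,b").
# enable_psp_plugin is a hypothetical helper name.
enable_psp_plugin() {
  local manifest="$1" backup_dir="$2"
  if [ -z "$manifest" ] || [ -z "$backup_dir" ]; then
    echo "usage: enable_psp_plugin MANIFEST BACKUP_DIR" >&2
    return 1
  fi
  # Already present anywhere in the list? Then leave the file alone.
  if grep -Eq -- '--enable-admission-plugins=([^[:space:]]*,)?PodSecurityPolicy(,|[[:space:]]|$)' "$manifest"; then
    echo "already enabled"
    return 0
  fi
  # Back up first; backup_dir should be outside /etc/kubernetes/manifests.
  cp -- "$manifest" "$backup_dir/$(basename -- "$manifest").bak" || return 1
  # Append ",PodSecurityPolicy" to the end of the flag's value.
  sed -i -E 's/(--enable-admission-plugins=[^[:space:]]+)/\1,PodSecurityPolicy/' "$manifest"
  echo "updated"
}

# Usage (on each control-plane node):
# enable_psp_plugin /etc/kubernetes/manifests/kube-apiserver.yaml /root
```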

Cloud Providers - If you are using a managed Kubernetes offering from a cloud provider, you won’t have access to the API server’s configuration, but providers often expose an advanced setting that lets you enable PSPs for your cluster.

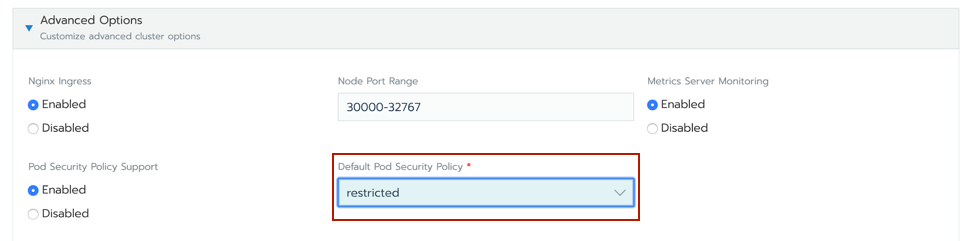

Rancher - This is probably the easiest. Edit the cluster in the GUI, and under ‘Advanced Options’ select a Default Pod Security Policy.

Just a note here: This is already enough to prevent someone from taking control of your cluster, like we demonstrated above. But we’ll need to write rules to at least allow our good pods into the cluster.

Let’s quickly test a simple nginx pod via a deployment.

```
[root@test-cluster /]# kubectl create deploy nginx -n test-ns --image=nginx
deployment.apps/nginx created
[root@test-cluster /]# kubectl get pod -n test-ns
No resources found in test-ns namespace.
```

Ok. So our pod wasn’t created. Let’s look at the other resources that form part of our deployment.

```
# kubectl get all -n test-ns
NAME                    READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/nginx   0/1     0            0           4m58s

NAME                               DESIRED   CURRENT   READY   AGE
replicaset.apps/nginx-6799fc88d8   1         0         0       4m58s
```

We can see our deployment created a ReplicaSet, but the ReplicaSet wasn’t able to create the pod. Doing a describe on the ReplicaSet, we see the following:

```
[...]
Events:
  Type     Reason        Age                   From                   Message
  ----     ------        ----                  ----                   -------
  Warning  FailedCreate  56s (x17 over 6m24s)  replicaset-controller  Error creating: pods "nginx-6799fc88d8-" is forbidden: PodSecurityPolicy: no providers available to validate pod request
```

Looks like we’ll have to write some policy to allow some pods.

A preferred restrictive pod security policy:

```yaml
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: super-restricted-psp
spec:
  allowPrivilegeEscalation: false # Prevents a process from gaining more privileges than its parent
  fsGroup: # Prevents GID 0 from being used for volumes
    ranges:
      - max: 65535
        min: 1
    rule: MustRunAs
  requiredDropCapabilities:
    - ALL
  runAsUser:
    rule: RunAsAny
  seLinux:
    rule: RunAsAny
  supplementalGroups: # Restricts supplemental GIDs to non-zero values
    ranges:
      - max: 65535
        min: 1
    rule: MustRunAs
  volumes: # Only allow specific volume types
    - configMap
    - emptyDir
    - projected
    - secret
    - downwardAPI
    - persistentVolumeClaim
```

But what does it all mean!?

With the above policy we ensure that pods are quite ring-fenced. Let’s go through a few items:

- allowPrivilegeEscalation: false - Essentially, we’re worried about container processes that don’t have the no_new_privs flag set. This flag controls whether a process can gain more privileges than its parent process. Setting this value to false ensures that the no_new_privs flag is set on our containers’ processes.

- fsGroup - The fsGroup ID applied to the pod’s volumes must fall in the range between 1 and 65535, ruling out GID 0; supplementalGroups enforces the same range for the pod’s supplemental groups.

- requiredDropCapabilities - Docker provides a set of capabilities to a container by default. These are capabilities like chown, kill, setgid, etc. (Full list here).

These are capabilities that, in the majority of use-cases (and in my opinion), you won’t need in a running container - these tasks can be taken care of at build time.

With requiredDropCapabilities: ALL, every container admitted under this policy has all of its capabilities dropped, and none can be added back. It’s worth checking that your images still work under that restriction before they reach the cluster; when running them locally with docker-compose, that means a cap_drop: ALL entry. Something like this:

```yaml
frontend:
  image: frontend
  build:
    context: .
  ports:
    - "2011:2011"
  volumes:
    - ./frontend/src/:/frontend/
  env_file: .env
  cap_drop:
    - ALL
```

If additional capabilities are required, I would suggest dropping all of them and only adding back what is needed (cap_add). You will also need to create a custom PSP to match; otherwise, once again, the pod won’t be allowed to run.

- volumes - Pods may only use the volume types in this list. If a pod tries to mount a hostPath, it won’t be allowed to run.
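To see the effect of allowPrivilegeEscalation and requiredDropCapabilities from the inside, you can read the kernel’s view of a process in a running container. A minimal bash sketch, assuming the image ships bash and awk, and a Linux 4.10+ kernel (which reports NoNewPrivs in /proc/<pid>/status); the function name check_process_restrictions is hypothetical. It prints the no_new_privs flag, whether the effective capability mask (CapEff) is empty, and whether CAP_CHOWN (capability number 0) is present.

```bash
#!/usr/bin/env bash
# Sketch: inspect a process's no_new_privs flag and capabilities from inside
# a container. Defaults to PID 1, the container's main process.
# check_process_restrictions is a hypothetical helper name.
check_process_restrictions() {
  local status_file="${1:-/proc/1/status}"
  local nnp capeff
  nnp=$(awk '/^NoNewPrivs:/ { print $2 }' "$status_file")
  capeff=$(awk '/^CapEff:/ { print $2 }' "$status_file")
  echo "no_new_privs: ${nnp:-unknown}"
  if [ -z "$capeff" ]; then
    echo "capabilities: unknown"
  elif [[ "$capeff" =~ ^0+$ ]]; then
    # An all-zero effective mask: every capability has been dropped.
    echo "capabilities: none"
  else
    echo "capabilities: 0x$capeff"
  fi
  # CAP_CHOWN is capability number 0, i.e. the lowest bit of the hex mask.
  if [ -n "$capeff" ] && (( (16#$capeff & 1) == 1 )); then
    echo "CAP_CHOWN: yes"
  else
    echo "CAP_CHOWN: no"
  fi
}
```

Run it inside the container. For a pod admitted under the policy above, you’d expect to see no_new_privs: 1 and capabilities: none.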

Exceptions

You will, without a doubt, need to run pods that have more privileges than this policy allows. A simple example: a number of log shipping solutions rely on the container runtime logging container output to a file on the host (i.e. the Docker logging driver set to json-file). The log shipping pods then mount that host path and ship the logs to a Graylog, syslog, or Elasticsearch server.

Roles and their bindings

In addition to the above PSP, we need to give our pods’ service accounts access to the PSP, and this is done with Roles and RoleBindings. In my opinion, the above policy is restrictive enough to be treated as a default cluster policy. For a default cluster policy, we can write a ClusterRole and a ClusterRoleBinding that give every service account in the cluster access to it. If and when a deployment requires additional permissions, a custom ad-hoc PSP can be written, but it shouldn’t be usable by all deployments, as that would put us back in a similarly exposed position (an open PSP that anyone can use).

PSP <--> Role <--> RoleBinding <--> ServiceAccount

We will dig into this now, but essentially a PSP is linked to a service account via a Role and a RoleBinding.

First we link the PSP to a cluster role. And we can see below that our cluster role cr-use-restricted-psp is allowed to use the podsecuritypolicy called super-restricted-psp.

Cluster Role

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: cr-use-restricted-psp
rules:
  - apiGroups: ['policy']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames:
      - super-restricted-psp
```

With that done, we create a ClusterRoleBinding that ties our ClusterRole cr-use-restricted-psp to all of the cluster’s service accounts (current and future) through the system:serviceaccounts group.

Cluster Role Binding

```yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: crb-use-restricted-role
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cr-use-restricted-psp
subjects:
  - apiGroup: rbac.authorization.k8s.io
    kind: Group
    name: system:serviceaccounts
```

Add all three objects (PodSecurityPolicy, ClusterRole and ClusterRoleBinding) to a single YAML file, separated by ---, and kubectl apply it:

```
[root@test-cluster /]# kubectl apply -f restrictive-psp.yml
podsecuritypolicy.policy/super-restricted-psp created
clusterrole.rbac.authorization.k8s.io/cr-use-restricted-psp created
clusterrolebinding.rbac.authorization.k8s.io/crb-use-restricted-role created
```

We can see our newly created PSP with the following command:

```
[root@test-cluster /]# kubectl get psp
NAME                   PRIV    CAPS   SELINUX    RUNASUSER   FSGROUP     SUPGROUP    READONLYROOTFS   VOLUMES
super-restricted-psp   false          RunAsAny   RunAsAny    MustRunAs   MustRunAs   false            configMap,emptyDir,projected,secret,downwardAPI,persistentVolumeClaim
```

Looking Good!!
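Another check worth doing at this point is to ask the API server whether a given service account may use the new policy. This sketch wraps kubectl auth can-i with impersonation, similar to the approach in the Kubernetes PSP documentation. The function name can_sa_use_psp is hypothetical, and impersonating a service account requires the impersonate permission (cluster admins normally have it).

```bash
#!/usr/bin/env bash
# Sketch: may service account <sa> in namespace <ns> "use" PSP <psp>?
# kubectl prints "yes" or "no".
# can_sa_use_psp is a hypothetical helper name.
can_sa_use_psp() {
  local ns="$1" sa="$2" psp="$3"
  kubectl auth can-i use "podsecuritypolicy/$psp" \
    --as="system:serviceaccount:$ns:$sa" -n "$ns"
}

# Usage:
# can_sa_use_psp test-ns default super-restricted-psp
```

With the ClusterRoleBinding above in place, you’d expect the usage example to print yes.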

Let’s try to run an nginx pod with our new security rules in place.

```
[root@test-cluster /]# kubectl run test-pod -n test-ns --image=nginx
pod/test-pod created
[root@test-cluster /]# kubectl get pod -n test-ns
NAME       READY   STATUS             RESTARTS   AGE
test-pod   0/1     CrashLoopBackOff   3          112s
[root@test-cluster /]# kubectl describe pod test-pod -n test-ns
[...]
Events:
  Type     Reason     Age                  From                   Message
  ----     ------     ----                 ----                   -------
  Normal   Scheduled  11m                                         Successfully assigned test-ns/test-pod to test-cluster
  Normal   Pulled     11m                  kubelet, test-cluster  Successfully pulled image "nginx" in 4.378131401s
  Normal   Created    10m (x4 over 11m)    kubelet, test-cluster  Created container test-pod
  Normal   Started    10m (x4 over 11m)    kubelet, test-cluster  Started container test-pod
  Normal   Pulling    9m46s (x5 over 11m)  kubelet, test-cluster  Pulling image "nginx"
  Warning  BackOff    103s (x46 over 11m)  kubelet, test-cluster  Back-off restarting failed container
[root@test-cluster ~]# kubectl logs test-pod -n test-ns
[...]
/docker-entrypoint.sh: Configuration complete; ready for start up
2020/12/20 10:15:07 [emerg] 1#1: chown("/var/cache/nginx/client_temp", 101) failed (1: Operation not permitted)
nginx: [emerg] chown("/var/cache/nginx/client_temp", 101) failed (1: Operation not permitted)
```

Our pod failed to start, which in this case is a good thing. Looking at the logs, we can see that nginx tries to perform a chown, which requires the CHOWN capability that our PSP dropped. Cool! Success…

What if we do want nginx to run? We have two options:

- If we don’t mind giving it the permissions that it needs, we can write a new PSP that allows the chown capability.

- If we don’t want to give it the permissions that it needs, we will have to amend the container to match our security requirements. There are a number of unprivileged nginx and httpd container images available on Docker Hub.

Pods that don’t require these additional permissions and fall within our security boundaries will be allowed to run without any additional configuration.

Checking which PSP was assigned to my pod

When a pod is allowed to run, the name of the PSP that admitted it is added to the pod object as the kubernetes.io/psp annotation.

A quick for loop:

```
$ for pod in $(kubectl get pod -n <namespace> | grep -v NAME | awk '{print $1}'); do echo $pod; kubectl get pod $pod -n <namespace> -o yaml | grep psp; done
```

Or using labels and jsonpath selectors:

```
$ kubectl get pod -l <labelKey>=<labelValue> -n <namespace> -o jsonpath='{.items[*].metadata.annotations.kubernetes\.io/psp}'
```
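A variation on the jsonpath approach prints one pod per line with its PSP next to it. A minimal sketch; list_pod_psps is a hypothetical name. Pods without the kubernetes.io/psp annotation are shown as <none>, which typically means they were admitted before the PodSecurityPolicy admission plugin was enabled.

```bash
#!/usr/bin/env bash
# Sketch: one line per pod in a namespace, with the PSP that admitted it.
# list_pod_psps is a hypothetical helper name.
list_pod_psps() {
  local ns="$1"
  # The dot inside the annotation key must be escaped in JSONPath;
  # awk replaces a missing annotation with "<none>".
  kubectl get pods -n "$ns" \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.metadata.annotations.kubernetes\.io/psp}{"\n"}{end}' |
    awk -F'\t' '{ printf "%s\t%s\n", $1, ($2 == "" ? "<none>" : $2) }'
}

# Usage:
# list_pod_psps test-ns
```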

How are pods evaluated against PSPs

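If there are multiple policies available in the cluster, the PSP controller only considers the policies that the pod’s service account (or the user creating the pod) has access to. Per the Kubernetes docs, a policy that allows the pod as-is, without changing any defaults, is preferred; if the pod has to be defaulted or mutated, the first policy in alphabetical order that allows it is used.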
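To make the ordering concrete, here is a toy model in bash. It is an illustration only, not how the admission controller is implemented: each input line names a policy the pod may use, plus a verdict (as-is, mutates or rejects) that you supply by hand. Following the rule in the Kubernetes docs, a policy that admits the pod unchanged wins; otherwise the first policy by name that admits it after mutation is chosen. LC_ALL=C gives plain byte-order sorting of the names. The function name pick_psp is hypothetical.

```bash
#!/usr/bin/env bash
# Toy model only: pick a PSP from hand-written verdicts on stdin.
# Each line: "<policy-name> <verdict>", where verdict is one of:
#   as-is    the policy admits the pod without changing it
#   mutates  the policy admits the pod only after defaulting/mutating it
#   rejects  the policy does not admit the pod
# pick_psp is a hypothetical helper name.
pick_psp() {
  LC_ALL=C sort -k1,1 | awk '
    # The docs say order among non-mutating policies does not matter;
    # this model simply takes the first one by name.
    $2 == "as-is"   && asis == ""   { asis = $1 }
    $2 == "mutates" && mutate == "" { mutate = $1 }
    END {
      if (asis != "")        print asis
      else if (mutate != "") print mutate
      else                   print "REJECTED"
    }'
}
```

For example, printf 'aa-restricted mutates\nzz-open as-is\n' | pick_psp prints zz-open: the alphabetically later policy wins because it needs no mutation.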

To make a pod or deployment use a custom PSP, run it under a service account that has been granted that PSP, by setting serviceAccountName in the pod spec of the deployment YAML. If no service account is specified, the namespace’s default service account is used.

```yaml
# [...]
containers:
  - image: containerregistry/myrepo/imagename:v2.1.0
    name: cool-container
    securityContext:
      capabilities:
        add:
          - CHOWN
          - SETGID
          - SETUID
        drop:
          - ALL
    volumeMounts:
      - mountPath: "/storage"
        name: container-storage
serviceAccountName: cool-container-sa
```
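The service account still needs access to the custom PSP. Rather than another cluster-wide binding, a namespaced Role and RoleBinding keep that access limited to one service account. A minimal sketch using kubectl’s imperative create commands; the function name grant_psp_to_sa and the role and binding naming scheme are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: let ONE service account use a custom PSP, scoped to its namespace,
# instead of binding the PSP cluster-wide.
# grant_psp_to_sa and the "use-<psp>" naming scheme are hypothetical.
grant_psp_to_sa() {
  local ns="$1" sa="$2" psp="$3"
  # Role: allowed to "use" exactly this PodSecurityPolicy.
  kubectl create role "use-$psp" -n "$ns" \
    --verb=use --resource=podsecuritypolicies.policy --resource-name="$psp"
  # RoleBinding: hand that Role to the single service account.
  kubectl create rolebinding "use-$psp-$sa" -n "$ns" \
    --role="use-$psp" --serviceaccount="$ns:$sa"
}

# Usage (hypothetical names):
# grant_psp_to_sa my-ns my-app-sa my-custom-psp
```

Because the binding is namespaced, only pods running under that service account in that namespace can use the custom PSP.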

Conclusion

Let’s take another look at that malicious pod from earlier. This time the pod isn’t even allowed to start, so the user never gets dropped into a bash prompt.

Running the script again, the API server rejects the pod:

```
Error from server (Forbidden): pods "blackspy-sudo" is forbidden: PodSecurityPolicy: unable to validate against any pod security policy: []
```

That feels better! Way more secure…

But as with anything in security, we need more layers! PSPs are by no means a silver bullet. Implementing PSPs in your cluster is just one of the many layers needed to secure your container development life-cycle.